Getting started with computer vision

The state of the art technology in the software industry today

Introduction to Computer Vision

A basic explanation of Computer Vision beyond all the media noise and glamour.

“If we want machines to think, we need to teach them to see.”

I combine magic and science to create illusions. I work with new media and interactive technologies, things like artificial intelligence or computer vision, and integrate them in my magic.

With this in mind, I welcome you to this article.

The prime purpose of this article is to explore the basic concepts and building blocks of computer vision.

Computer vision has been a popular recurring term for the past decade, although its popularity has oscillated over time from an unheard-of subject to hot news. As a result of becoming a trending topic in recent years, the understanding of what computer vision entails has become somewhat noisy. This article therefore breaks down the term computer vision and analyses its components, providing a baseline understanding of what computer vision is.

Computer vision is a key enabling technology for artificial intelligence.

“Understanding vision and building visual systems is really understanding intelligence.” And by “see”, I mean to understand, not just to record pixels.

Let’s just proceed with our Agenda now.

AGENDA

In computer vision, by saying we are enabling computers to “see,” we mean enabling machines and devices to process digital visual data, which can include images taken with traditional cameras, a graphical representation of a location, a video, a heat intensity map of any data, and beyond.

As you can see, computer vision applications are becoming ubiquitous in our day-to-day lives. We can find an object or a face in a video or in a live video feed, understand motion and patterns within a video, and increase or decrease the size, brightness, or sharpness of an image.
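To make operations such as changing brightness or size concrete, here is a minimal sketch. It assumes a tiny grayscale image stored as nested Python lists with intensities from 0 to 255; the function names `adjust_brightness` and `resize_nearest` are made up for illustration, and a real application would normally use an image library instead.

```python
def adjust_brightness(image, offset):
    """Add `offset` to every pixel, clamping the result to the 0..255 range."""
    return [[max(0, min(255, pixel + offset)) for pixel in row] for row in image]


def resize_nearest(image, new_height, new_width):
    """Nearest-neighbour resize: each output pixel copies the closest input pixel."""
    old_height, old_width = len(image), len(image[0])
    return [
        [
            image[r * old_height // new_height][c * old_width // new_width]
            for c in range(new_width)
        ]
        for r in range(new_height)
    ]


# A 2x2 grayscale image: one number per pixel (0 = black, 255 = white).
image = [
    [10, 200],
    [120, 250],
]

brighter = adjust_brightness(image, 40)
bigger = resize_nearest(image, 4, 4)

print(brighter)
for row in bigger:
    print(row)
```

The point of the sketch is that an image is just a grid of numbers, so "increasing brightness" is arithmetic on those numbers and "resizing" is choosing which numbers to copy.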

Scope

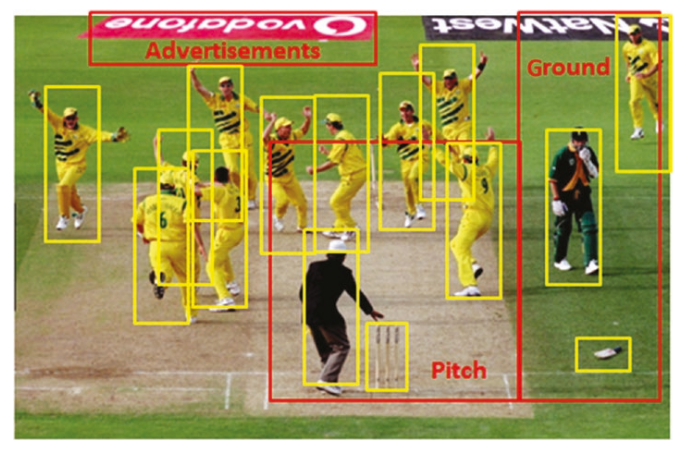

To understand what constitutes computer vision, look at the figure above.

Though you are looking at this image for the first time, you can probably tell that it shows the sport of cricket being played on a bright day. Specifically, it is a match between Australia and South Africa, and Australia won the match. The overall mood is one of celebration, and a few players can be named either by recognizing their facial features or by reading the names printed on their shirts.

What you observe at a glance is complex for a computer vision application: it amounts to a set of multiple separate inferences. Let’s now map this human-driven interpretation to a machine’s vision processes.

Image Detection And Classification

You can observe objects such as grass/ground, people, cricket equipment advertisements, and sports uniforms. These objects are then grouped into categories. This process of extracting information is referred to as image detection and classification.

How Image Classification Works

In general, image classification techniques can be categorised as parametric or non-parametric, supervised or unsupervised, and hard or soft classifiers. A supervised technique delivers results based on a decision boundary that is learned from the inputs and the labelled outputs provided while training the model. An unsupervised technique, by contrast, produces its result by analysing the input dataset on its own; labels are not fed to the model.
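The difference between the supervised and unsupervised cases can be sketched in a few lines. This is an illustrative toy, not a production method: it assumes each image has already been reduced to a single hypothetical feature (its mean brightness), uses a nearest-centroid rule for the supervised case and a two-group k-means style loop for the unsupervised case, and assumes the data contains at least two distinct values.

```python
def fit_centroids(features, labels):
    """Supervised: average the feature values of each labelled class."""
    sums, counts = {}, {}
    for value, label in zip(features, labels):
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Assign the class whose centroid is closest to the new value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def two_means(features, iterations=10):
    """Unsupervised: split the values into two groups without any labels."""
    low, high = min(features), max(features)
    for _ in range(iterations):
        near_low = [v for v in features if abs(v - low) <= abs(v - high)]
        near_high = [v for v in features if abs(v - low) > abs(v - high)]
        low = sum(near_low) / len(near_low)
        high = sum(near_high) / len(near_high)
    return low, high


# Hypothetical feature: mean brightness of six images.
brightness = [20.0, 30.0, 25.0, 200.0, 210.0, 190.0]
labels = ["night", "night", "night", "day", "day", "day"]

centroids = fit_centroids(brightness, labels)   # needs the labels
prediction = predict(centroids, 180.0)
cluster_centres = two_means(brightness)         # never sees the labels

print(centroids, prediction, cluster_centres)
```

Both approaches find the same two groups here, but only the supervised one can name them "night" and "day", because only it was given the labels.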

The main steps involved in image classification are determining a suitable classification system, selecting good training samples, image pre-processing, feature extraction, selecting an appropriate classification method, post-classification processing, and finally assessing the overall accuracy. The input is usually an image of a specific object.
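The last step, assessing overall accuracy, can be sketched as follows. The labels below are invented for illustration, and the helper names `overall_accuracy` and `confusion_counts` are hypothetical.

```python
from collections import Counter


def overall_accuracy(true_labels, predicted_labels):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


def confusion_counts(true_labels, predicted_labels):
    """Count how often each (true, predicted) pair occurs."""
    return Counter(zip(true_labels, predicted_labels))


# Invented example: what five image regions really are vs. what a model said.
true_labels = ["grass", "player", "player", "bat", "grass"]
predicted_labels = ["grass", "player", "bat", "bat", "grass"]

accuracy = overall_accuracy(true_labels, predicted_labels)
confusion = confusion_counts(true_labels, predicted_labels)

print(accuracy)
print(confusion[("player", "bat")])
```

The confusion counts show not only how often the classifier is wrong but also which categories it mixes up, which a single accuracy number hides.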

Convolutional Neural Networks (CNNs) are the most popular neural network models used for image classification problems.
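The operation that gives CNNs their name can be sketched without any framework. The example below assumes a hand-picked 2x2 kernel, whereas a real CNN learns its kernel values during training and stacks many such layers. It slides the kernel over a tiny image and applies a ReLU, producing a feature map that lights up where a dark-to-bright vertical edge occurs.

```python
def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    k_height, k_width = len(kernel), len(kernel[0])
    out_height = len(image) - k_height + 1
    out_width = len(image[0]) - k_width + 1
    return [
        [
            sum(
                image[r + i][c + j] * kernel[i][j]
                for i in range(k_height)
                for j in range(k_width)
            )
            for c in range(out_width)
        ]
        for r in range(out_height)
    ]


def relu(feature_map):
    """Keep positive responses and set negative ones to zero."""
    return [[max(0, value) for value in row] for row in feature_map]


# 4x4 image: dark left half, bright right half.
image = [[0, 0, 9, 9] for _ in range(4)]

# Hand-picked kernel that responds to dark-to-bright vertical edges.
kernel = [
    [-1, 1],
    [-1, 1],
]

feature_map = relu(convolve2d(image, kernel))
for row in feature_map:
    print(row)
```

The middle column of the output is the only non-zero one, which is exactly where the edge sits in the input.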

Image Segmentation

At a high level, there is ground, and there is a pitch. While it is difficult to exactly pinpoint the boundaries of each, making the markings based on the objects within the image is possible. This process is referred to as image segmentation.

We can divide or partition the image into various parts called segments. It’s not a great idea to process the entire image at the same time as there will be regions in the image which do not contain any information. By dividing the image into segments, we can make use of the important segments for processing the image.

Image segmentation creates a pixel-wise mask for each object in the image. This technique gives us a far more granular understanding of the object(s) in the image.
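A pixel-wise mask can be illustrated with a deliberately simple approach: threshold the image, then give every connected bright region its own label. This is a sketch under stated assumptions (a grayscale image as nested lists, a hypothetical `label_regions` helper, 4-connected neighbours); modern segmentation models are learned rather than thresholded.

```python
def label_regions(image, threshold):
    """Label each connected region brighter than `threshold` (0 = background)."""
    height, width = len(image), len(image[0])
    labels = [[0] * width for _ in range(height)]
    region_count = 0
    for r in range(height):
        for c in range(width):
            if image[r][c] <= threshold or labels[r][c]:
                continue  # background pixel, or already part of a region
            region_count += 1
            labels[r][c] = region_count
            stack = [(r, c)]
            while stack:  # flood fill over up/down/left/right neighbours
                y, x = stack.pop()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (
                        0 <= ny < height
                        and 0 <= nx < width
                        and image[ny][nx] > threshold
                        and not labels[ny][nx]
                    ):
                        labels[ny][nx] = region_count
                        stack.append((ny, nx))
    return labels, region_count


image = [
    [200, 200, 10, 10],
    [200, 10, 10, 180],
    [10, 10, 10, 180],
]

mask, count = label_regions(image, threshold=100)
for row in mask:
    print(row)
print(count)
```

Every pixel ends up with a label, so the mask says exactly which pixels belong to which object rather than only roughly where the object is.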

Object Detection

Taking this to the next level, you can draw smarter and smaller boundaries that help identify specific people and objects in the image. This can be observed as small boxes marked around each potential unique object, as shown in the figure.

Now, within each box, there could be people or different cricket-related objects. At the next level, you can detect and tag what each box contains, as also shown in the figure. This process is called object detection.

The problem definition of object detection is to determine where objects are located in a given image (object localisation) and which category each object belongs to (object classification).

In simple words, object detection builds on image classification: besides classifying, it also identifies the location of object instances from a large number of predefined categories in natural images.
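The two halves of a detection, the category and the location, can be sketched as a small data structure together with intersection over union (IoU), a common way to score how well a predicted box matches a reference box. The `Detection` class, the box coordinates, and the score are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    label: str    # which category the object belongs to (classification)
    box: tuple    # where it is: (x_min, y_min, x_max, y_max) (localisation)
    score: float  # how confident the detector is, from 0 to 1


def iou(box_a, box_b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    overlap_w = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    overlap_h = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = overlap_w * overlap_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union else 0.0


# Invented example: a detector's output and a hand-drawn reference box.
predicted = Detection(label="player", box=(10, 10, 50, 90), score=0.92)
reference_box = (20, 10, 60, 90)

overlap = iou(predicted.box, reference_box)
print(predicted.label, round(overlap, 2))
```

An IoU of 1 means the boxes coincide and 0 means they do not overlap at all; evaluations typically count a detection as correct only above some chosen IoU threshold.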

Image Detection And Manipulation Process

Extending the above reading, you can look closely at the people’s faces and, through the face recognition process, determine exactly who each player is. You can also observe that each person is of a different height and build.

Names on the back of the players’ shirts can be another source for determining who each player is. An optical character recognition (OCR) or handwriting recognition process can recognize shapes and lines and infer letters or characters.

Depending on the color of the uniform, you can infer what type of match it is and what teams are playing. Identifying the colors of the pixels is part of the image detection and manipulation process.
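Inferring a team from uniform colour can be sketched as follows: average the RGB values of the shirt pixels and pick the closest reference colour. The reference colours, the kit names, and the sample pixels below are all hypothetical values chosen for illustration.

```python
# Hypothetical reference colours for two kits, as (red, green, blue).
KIT_COLOURS = {
    "yellow kit": (255, 215, 0),
    "green kit": (0, 120, 60),
}


def mean_colour(pixels):
    """Average each channel over a list of (r, g, b) pixels."""
    count = len(pixels)
    return tuple(sum(pixel[i] for pixel in pixels) / count for i in range(3))


def closest_kit(pixels):
    """Return the kit whose reference colour is nearest to the mean colour."""
    average = mean_colour(pixels)

    def squared_distance(reference):
        return sum((a - b) ** 2 for a, b in zip(average, reference))

    return min(KIT_COLOURS, key=lambda name: squared_distance(KIT_COLOURS[name]))


# Invented sample of pixels taken from one player's shirt.
shirt_pixels = [(250, 210, 10), (240, 200, 20), (255, 220, 0)]

kit = closest_kit(shirt_pixels)
print(kit)
```

Plain RGB distance is the simplest choice; it is sensitive to lighting, so practical systems often compare colours in a space that separates hue from brightness.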

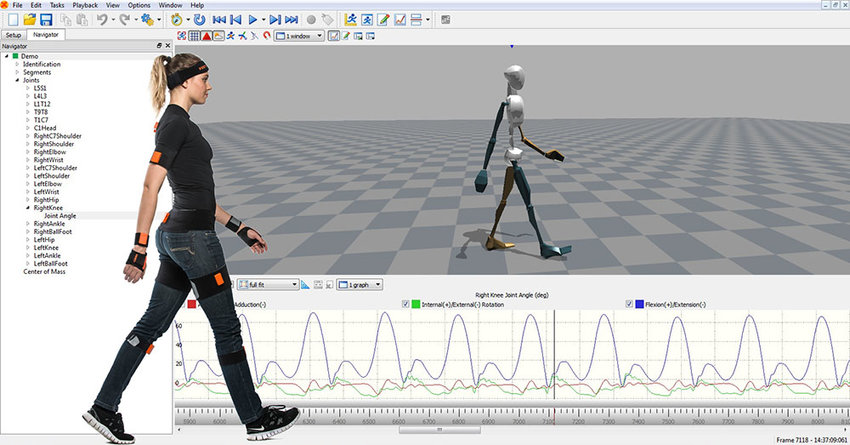

Motion Tracking

During the game, the movement of the ball can be tracked, and the speed at which the ball strikes the bat can be computed. The path the ball will potentially take can be determined as well. A few important statistics, such as how many deliveries have hit a particular spot on the pitch, can also be computed. This is possible using a process called motion tracking.
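Once a tracker has produced positions over time, speed and a rough path prediction follow from simple arithmetic. The sketch below assumes the track is already available as `(time in seconds, x in metres, y in metres)` samples, which are invented here. The prediction uses a constant-velocity assumption that ignores gravity, bounce, and spin.

```python
import math


def speeds(track):
    """Speed in m/s between each pair of consecutive samples."""
    return [
        math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
        for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:])
    ]


def predict_next(track, dt):
    """Constant-velocity guess of the position `dt` seconds after the last sample."""
    (t0, x0, y0), (t1, x1, y1) = track[-2], track[-1]
    velocity_x = (x1 - x0) / (t1 - t0)
    velocity_y = (y1 - y0) / (t1 - t0)
    return (x1 + velocity_x * dt, y1 + velocity_y * dt)


# Invented track: (time_s, x_m, y_m) for three video frames.
track = [(0.0, 0.0, 0.0), (0.5, 3.0, 4.0), (1.0, 9.0, 12.0)]

ball_speeds = speeds(track)
next_position = predict_next(track, dt=0.5)

print(ball_speeds)
print(next_position)
```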

Tracking is, in our context, the positional measurement of bodies (subjects or objects) that move in a defined space. Position and/or orientation of the body can be measured. If just X, Y and Z position are measured, we call this 3 degrees of freedom (3DOF or 3D) tracking. If position (3 coordinates) and orientation (3 independent angular coordinates) are measured simultaneously, we call this 6 degrees of freedom (6DOF or 6D) tracking.
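The difference between 3DOF and 6DOF shows up directly in the data a tracker returns. In the sketch below, the class names `Pose3DOF` and `Pose6DOF` and the helper `tip_position` are hypothetical, angles are in radians, and the helper makes the simplifying assumption that roll and pitch are zero so that only yaw matters.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose3DOF:
    """Position only: three numbers."""
    x: float
    y: float
    z: float


@dataclass
class Pose6DOF:
    """Position plus orientation: six numbers (angles in radians)."""
    x: float
    y: float
    z: float
    roll: float
    pitch: float
    yaw: float


def tip_position(pose, length):
    """World position of a point `length` metres ahead of the tracked body.

    Simplifying assumption: roll and pitch are zero, so only yaw
    (rotation about the vertical z axis) sets the direction.
    """
    return (
        pose.x + length * math.cos(pose.yaw),
        pose.y + length * math.sin(pose.yaw),
        pose.z,
    )


bat = Pose6DOF(x=1.0, y=2.0, z=0.5, roll=0.0, pitch=0.0, yaw=math.pi / 2)
tip = tip_position(bat, length=1.0)
print(tuple(round(value, 6) for value in tip))
```

With a `Pose3DOF` alone, the tip could not be located, because the direction the body is pointing in is unknown; that is the information the three extra angles add.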

There are various tracking systems, based on different measurement principles, available: e.g. mechanical trackers, magnetic trackers, optical trackers (VIS or IR), acoustic trackers and systems based on inertial or gyro sensors. Hybrid systems, combining different techniques, are also widely used.

Image Reconstruction

Continuing with our cricket figure, whether the player is “in” or “out” is sometimes decided by his leg position while he is striking the ball. To determine this accurately, images from different cameras set up at different angles need to be analyzed to identify the precise position of the player’s leg.

Another example of Image Reconstruction is down below

This process is called image reconstruction, where an object is compiled from different tomographic projections of the same object taken at different angles.
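The idea of rebuilding an object from its projections can be sketched with the crudest possible method: unfiltered back projection from just two views. This is a toy under stated assumptions (a 3x3 object, projections taken only along rows and along columns); real tomographic reconstruction uses many angles and a filtering step.

```python
def project(image):
    """Two views of the object: sums along each row and along each column."""
    row_sums = [sum(row) for row in image]
    column_sums = [sum(column) for column in zip(*image)]
    return row_sums, column_sums


def back_project(row_sums, column_sums):
    """Smear each projection back across the grid and add the two smears."""
    return [[r + c for c in column_sums] for r in row_sums]


# The hidden object: a single bright point in the middle.
hidden_object = [
    [0, 0, 0],
    [0, 5, 0],
    [0, 0, 0],
]

row_sums, column_sums = project(hidden_object)
estimate = back_project(row_sums, column_sums)

for row in estimate:
    print(row)
```

The estimate is brightest at the true location of the point but also shows streaks along its row and column; adding more viewing angles and filtering is what reduces those streaks in practice.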

We will cover all the processes listed here in detail, with hands-on examples, in upcoming blogs.

In the next blog, you will read about the challenges of computer vision and its real-world applications.

That is all for this blog. I hope you liked it and learned something.

About the author

I’m Aryan Bindal, pursuing a B.Tech. in CSE at the Indian Institute of Information Technology, Sonepat. I am passionate about how machine learning can change our lives and make our world smarter and better. I enjoy a good cup of coffee, watching good movies and TV shows, playing video games, and doing anything music-related.

Reviews

If you found this interesting, we would really like to hear from you.

Ping us at Instagram/@the.blur.code

If you want articles on any topic, DM us on Instagram.

Thanks for reading!!

Happy Coding